Computer vision (CV) is reshaping industries with diverse applications, from self-driving cars to augmented reality, facial recognition systems, and medical diagnostics. “No industry has been or will be untouched by computer vision innovation, and the next generation of emerging technologies will generate new market opportunities and innovations, including: Scene understanding and fine-grained object and behavior recognition for security, worker health and safety, and critical patient care.” Despite the robust growth and increasing market value, computer vision still faces challenges.

Innovations like the shift from model-centric to data-centric artificial intelligence and the rise of Generative AI appear promising for tackling common computer vision challenges.

Below, we walk through five common problems, the solutions to each, and how those solutions pave the way for a more advanced and efficient use of computer vision.

1. Variable lighting conditions

Problem:

One of the significant challenges for computer vision systems is dealing with varied lighting conditions. Changes in lighting can considerably alter the appearance of an object in an image, making it difficult for the system to recognize. The challenge is complex because of the difference between human visual perception and camera image processing: humans adjust easily to different lighting conditions, while computer vision systems can struggle to do so. Varying amounts of light in different parts of the scene, combined with shadows and highlights, distort the appearance of objects. Moreover, different types of light (e.g., natural, artificial, direct, diffused) create different visual effects, further complicating the object recognition task for these systems.

Solution:

Techniques such as histogram equalization and gamma correction help counteract the effects of variable lighting conditions. Histogram equalization improves the contrast of an image by spreading out the most frequent intensity values across the available intensity range. Gamma correction adjusts the brightness of an image by applying a nonlinear (power-law) operation to the pixel values. Together, these methods normalize brightness and contrast across an image, improving the system’s ability to identify objects under different lighting conditions.
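To make the two techniques concrete, here is a minimal NumPy sketch for 8-bit grayscale images. The function names `equalize_histogram` and `gamma_correct` are illustrative, not taken from any particular library, and the gamma convention used here (output = input raised to `gamma`, so values below 1 brighten the image) is an assumption; some tools define gamma the other way around.

```python
import numpy as np

def equalize_histogram(img: np.ndarray) -> np.ndarray:
    """Histogram-equalize an 8-bit grayscale image (illustrative helper)."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]          # CDF at the darkest intensity present
    total = img.size
    if total == cdf_min:               # flat image: nothing to stretch
        return img.copy()
    # Map each intensity through the normalized CDF onto the range 0..255.
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255.0)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[img]

def gamma_correct(img: np.ndarray, gamma: float) -> np.ndarray:
    """Apply out = in ** gamma on intensities scaled to [0, 1]."""
    normalized = img.astype(np.float64) / 255.0
    corrected = np.power(normalized, gamma)
    return np.round(corrected * 255.0).astype(np.uint8)

# Synthetic dark, low-contrast image: all intensities fall in 40..79.
rng = np.random.default_rng(0)
dark = rng.integers(40, 80, size=(64, 64), dtype=np.uint8)

equalized = equalize_histogram(dark)      # contrast stretched to the full range
brightened = gamma_correct(dark, 0.5)     # gamma < 1 lifts dark regions
```

In this toy case the equalized image spans the full 0 to 255 range, and the gamma-corrected image has a higher mean intensity than the input. Real pipelines often apply such steps per region rather than globally, which this sketch does not attempt.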

Another approach to the problem of variable lighting conditions involves using hardware solutions, such as infrared sensors or depth cameras. These devices capture information that is far less affected by visible lighting, making object recognition more manageable. For instance, depth cameras provide data about the distance of different parts of an object from the camera, which helps identify the object even when lighting conditions make it difficult to discern its shape or color in a traditional 2D image. Similarly, infrared sensors can detect heat signatures, providing additional clues about an object’s identity.

2. Perspective and scale variability

Problem:

Objects can appear differently depending on their distance, angle, or size in relation to the camera. This variability in perspective and scale presents a significant challenge for computer vision systems. In remote sensing applications, accurate object detection from aerial images is more difficult due to the variety of objects that can be present, in addition to significant variations in scale and orientation.

Solution:

Techniques such as Scale-Invariant Feature Transform (SIFT), Speeded Up Robust Features (SURF), and similar methods can identify and compare objects in images regardless of scale or orientation.

SIFT can reliably identify objects even among clutter and under partial occlusion because its features are invariant to uniform scaling and orientation, robust to illumination changes, and partially invariant to affine distortion. The SIFT descriptor is based on image measurements taken over local scale-invariant reference frames, which are established by local scale selection. Because the features are local and describe the object’s appearance at particular interest points, they stay stable when the image is scaled or rotated.
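One ingredient of that rotation invariance is orientation assignment: each interest point gets a dominant gradient direction, and the descriptor is then expressed relative to it. The sketch below illustrates only that single step on a toy patch, using a 36-bin, magnitude-weighted orientation histogram. It is a simplified illustration under those assumptions, not a full SIFT implementation, and `dominant_orientation` is a hypothetical helper name.

```python
import numpy as np

def dominant_orientation(patch: np.ndarray, n_bins: int = 36) -> float:
    """Dominant gradient orientation of a patch, in degrees within [0, 360)."""
    gy, gx = np.gradient(patch.astype(np.float64))   # d/d(row), d/d(col)
    magnitude = np.hypot(gx, gy)
    angle = np.degrees(np.arctan2(gy, gx)) % 360.0
    bin_width = 360.0 / n_bins
    # Assign every pixel to its nearest orientation bin, wrapping 360 to 0.
    bins = np.round(angle / bin_width).astype(int) % n_bins
    hist = np.bincount(bins.ravel(), weights=magnitude.ravel(), minlength=n_bins)
    return float(np.argmax(hist) * bin_width)

# Toy patch whose brightness increases from left to right.
patch = np.tile(np.arange(16, dtype=np.float64), (16, 1))
rotated = np.rot90(patch)   # the same patch rotated 90 degrees counter-clockwise

theta = dominant_orientation(patch)          # 0.0: gradient points along +x
theta_rot = dominant_orientation(rotated)    # 270.0: rows grow downward, so "up" is -90
```

The dominant orientation moves with the patch (from 0 to 270 degrees in image coordinates), so a descriptor measured relative to it would be the same for both versions. That is the intuition behind rotation-invariant matching.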

3. Occlusion

Problem:

Occlusion refers to scenarios where another object hides or blocks part of an object. This challenge varies depending on the context and sensor setup used in computer vision. For instance, in object tracking, occlusion occurs when an object being tracked is hidden by another object, like two people walking past each other or a car driving under a bridge. In range cameras, occlusion represents areas where no information is present because the camera and laser are not aligned, or in stereo imaging, parts of the scene that are only visible to one of the two cameras. This issue poses a significant challenge to computer vision systems as they may struggle to identify and track partially obscured objects correctly over time.

Solution:

Techniques like Robust Principal Component Analysis (RPCA) can help separate an image’s background and foreground, potentially making occluded objects more distinguishable. RPCA is a modification of the principal component analysis (PCA) statistical procedure that aims to recover a low-rank matrix from highly corrupted observations. In video surveillance, if we stack the video frames as matrix columns, the low-rank component naturally corresponds to the stationary background, and the sparse component captures the moving objects in the foreground.
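The frame-stacking idea can be sketched in a few lines of NumPy. The example below solves the usual convex formulation of RPCA (minimize the nuclear norm of the low-rank part plus a weighted L1 norm of the sparse part) with a basic alternating scheme. The solver choice, the default parameters, and the synthetic "video" are all assumptions made for illustration; production systems typically use faster or more robust variants.

```python
import numpy as np

def shrink(x: np.ndarray, tau: float) -> np.ndarray:
    """Soft-thresholding: pull every entry toward zero by tau."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)

def singular_value_threshold(x: np.ndarray, tau: float) -> np.ndarray:
    """Soft-threshold the singular values of x."""
    u, s, vt = np.linalg.svd(x, full_matrices=False)
    return (u * shrink(s, tau)) @ vt

def rpca(m: np.ndarray, max_iter: int = 1000, tol: float = 1e-6):
    """Split m into low_rank + sparse (illustrative ADMM-style solver)."""
    n_rows, n_cols = m.shape
    lam = 1.0 / np.sqrt(max(n_rows, n_cols))           # sparsity weight
    mu = n_rows * n_cols / (4.0 * np.abs(m).sum())     # penalty parameter
    low_rank = np.zeros_like(m)
    sparse = np.zeros_like(m)
    dual = np.zeros_like(m)
    norm_m = np.linalg.norm(m)
    for _ in range(max_iter):
        low_rank = singular_value_threshold(m - sparse + dual / mu, 1.0 / mu)
        sparse = shrink(m - low_rank + dual / mu, lam / mu)
        residual = m - low_rank - sparse
        dual = dual + mu * residual
        if np.linalg.norm(residual) <= tol * norm_m:
            break
    return low_rank, sparse

# Synthetic video: 30 frames of 100 pixels each, one frame per column.
rng = np.random.default_rng(0)
n_pixels, n_frames = 100, 30
background = rng.uniform(0.2, 0.8, size=n_pixels)
frames = np.tile(background[:, None], (1, n_frames))   # static scene

# A 3-pixel bright object that moves 3 pixels per frame.
mask = np.zeros((n_pixels, n_frames), dtype=bool)
for t in range(n_frames):
    mask[3 * t : 3 * t + 3, t] = True
frames[mask] += 1.0

low_rank, sparse = rpca(frames)
```

Here `low_rank` approximates the static background repeated in every column, while the large entries of `sparse` line up with `mask`, the positions of the moving object.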

Training models on datasets that include occluded objects can improve their ability to handle such scenarios. However, creating these datasets poses a challenge due to the requirement of a large number and variety of occluded video objects with modal mask annotations. A possible solution is to use a self-supervised approach to create realistic data in large quantities. For instance, the YouTube-VOI dataset contains 5,305 videos, a 65-category label set including common objects such as people, animals, and vehicles, with over 2 million occluded and visible masks for moving video objects. A unified multi-task framework, such as the Video Object Inpainting Network (VOIN), can infer invisible occluded object regions and recover object appearances. The evaluation of the VOIN model on the YouTube-VOI benchmark demonstrates its advantages in handling occlusions.

4. Contextual understanding

Problem:

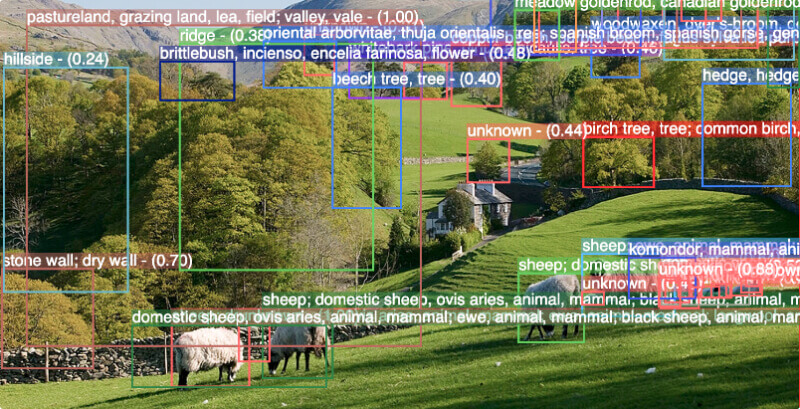

Computer vision systems often struggle to understand context. They can identify individual objects in an image, but understanding the relationships between those objects and interpreting the scene as a whole can be problematic.

Solution:

Scene understanding techniques are being developed to tackle this problem. One particularly challenging field within scene understanding is Concealed Scene Understanding (CSU), which involves recognizing objects with camouflaged properties in natural or artificial scenarios. The CSU field has advanced in recent years with deep learning techniques and the creation of large-scale public datasets such as COD10K, which has advanced the development of visual perception tasks, especially in concealed scenarios. A benchmark for Concealed Object Segmentation (COS), a crucial area within CSU, has been created for quantitative evaluation of the current state-of-the-art. Moreover, the applicability of deep CSU in real-world scenarios has been assessed by restructuring the CDS2K dataset to include challenging cases from various industrial settings.

Furthermore, Graph Neural Networks (GNNs), often paired with Natural Language Processing (NLP) techniques, can help models understand relations between objects in an image. GNNs have become a standard component of many 2D image understanding pipelines because they provide a natural way to represent the relational arrangement between objects in an image. They are used especially in tasks such as image captioning, Visual Question Answering (VQA), and image retrieval. These tasks require the model to reason about the image in order to describe it, explain aspects of it, or find similar images, all of which humans do with relative ease but which remain difficult for deep learning models.
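As a rough illustration of how a GNN passes information between related objects, the sketch below applies one graph-convolution layer to a tiny, made-up scene graph. The objects, their relations, the random feature vectors (standing in for per-object visual embeddings), and the random weights are all hypothetical. The layer is the common normalized-adjacency form and ignores edge labels such as "riding" or "wearing".

```python
import numpy as np

def gcn_layer(adjacency: np.ndarray, features: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """One graph-convolution layer: ReLU(D^-1/2 (A + I) D^-1/2 X W)."""
    a_hat = adjacency + np.eye(adjacency.shape[0])   # add self-loops
    degree = a_hat.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(degree)
    a_norm = a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(a_norm @ features @ weights, 0.0)

# Hypothetical scene graph: person rides horse, person wears hat, tree is unrelated.
objects = ["person", "horse", "hat", "tree"]
adjacency = np.zeros((4, 4))
for i, j in [(0, 1), (0, 2)]:
    adjacency[i, j] = adjacency[j, i] = 1.0          # undirected relation

rng = np.random.default_rng(0)
features = rng.normal(size=(4, 8))    # stand-in for per-object visual embeddings
weights = rng.normal(size=(8, 4))     # untrained layer weights, for illustration

out = gcn_layer(adjacency, features, weights)        # shape (4, 4)
```

After this layer, the "person" row mixes in information from "horse" and "hat", while the isolated "tree" row depends only on its own features. Stacking several layers lets information travel across longer chains of relations.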

5. Lack of annotated data

Problem:

Training computer vision models necessitates a substantial amount of annotated data. Image annotation, a critical component of training AI-based computer vision models, involves human annotators structuring data. For example, images are annotated to create training data for computer vision models identifying specific objects across a dataset. However, manual annotation is a labor-intensive process that often necessitates domain expertise, and this process can consume a significant amount of time, particularly when dealing with large datasets.

Solution:

Semi-supervised and unsupervised learning techniques offer promising solutions to this issue. These methods leverage unlabeled data, making the learning process more efficient.

Semi-supervised learning (SSL) aims to jointly learn from sparsely labeled data and a large amount of unlabeled auxiliary data. The underlying assumption is that the unlabeled data is often drawn from the same distribution as the labeled data. SSL has been used in various application domains such as image search, medical data analysis, web page classification, document retrieval, genetics, and genomics.
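One simple member of the SSL family is self-training with pseudo-labels: fit a model on the few labeled examples, label the unlabeled examples it is confident about, and refit. The sketch below uses a nearest-centroid classifier on 2D toy features purely for brevity. The classifier, the margin threshold, and the synthetic data are assumptions; a real vision system would apply the same loop to a neural network on image features.

```python
import numpy as np

def fit_centroids(x: np.ndarray, y: np.ndarray, n_classes: int) -> np.ndarray:
    """Mean feature vector per class."""
    return np.stack([x[y == c].mean(axis=0) for c in range(n_classes)])

def predict_with_margin(x: np.ndarray, centroids: np.ndarray):
    """Nearest-centroid label plus a confidence margin (gap to the runner-up)."""
    dists = np.linalg.norm(x[:, None, :] - centroids[None, :, :], axis=2)
    ordered = np.sort(dists, axis=1)
    return dists.argmin(axis=1), ordered[:, 1] - ordered[:, 0]

def self_train(x_lab, y_lab, x_unlab, n_classes, margin_threshold=1.0, rounds=5):
    """Grow the training set with confidently pseudo-labeled samples."""
    x_train, y_train = x_lab.copy(), y_lab.copy()
    remaining = x_unlab.copy()
    for _ in range(rounds):
        if len(remaining) == 0:
            break
        centroids = fit_centroids(x_train, y_train, n_classes)
        labels, margin = predict_with_margin(remaining, centroids)
        confident = margin >= margin_threshold
        if not confident.any():
            break
        x_train = np.vstack([x_train, remaining[confident]])
        y_train = np.concatenate([y_train, labels[confident]])
        remaining = remaining[~confident]
    return fit_centroids(x_train, y_train, n_classes)

# Toy data: two well-separated clusters, only two labeled points per class.
rng = np.random.default_rng(0)
x = np.vstack([
    rng.normal(loc=[-2.0, 0.0], scale=0.5, size=(100, 2)),
    rng.normal(loc=[2.0, 0.0], scale=0.5, size=(100, 2)),
])
y = np.repeat([0, 1], 100)
labeled = np.zeros(len(x), dtype=bool)
labeled[[0, 1, 100, 101]] = True

centroids = self_train(x[labeled], y[labeled], x[~labeled], n_classes=2)
predictions, _ = predict_with_margin(x, centroids)
accuracy = (predictions == y).mean()
```

The loop only helps when the assumption stated above holds, namely that the unlabeled data comes from the same distribution as the labeled data. Confident but wrong pseudo-labels can otherwise reinforce mistakes.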

Unsupervised learning (UL) aims to learn from only unlabeled data without utilizing any task-relevant label supervision. Once trained, the model can be fine-tuned using labeled data to achieve better model generalization in a downstream task.

In addition, techniques like data augmentation can artificially increase the size of a dataset by creating altered versions of existing images, for example flipped, cropped, or brightness-shifted copies.
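A minimal augmentation routine might look like the following sketch, which assumes float images in the range [0, 1] with shape (height, width, channels). The specific transforms, the crop size, and the brightness range are illustrative choices, and `augment` is a hypothetical helper rather than a library function.

```python
import numpy as np

def augment(image: np.ndarray, rng: np.random.Generator,
            crop_size=(24, 24), max_brightness_shift=0.2) -> np.ndarray:
    """Return one randomly altered copy of an image with values in [0, 1]."""
    out = image.astype(np.float64)
    if rng.random() < 0.5:
        out = out[:, ::-1]                           # horizontal flip
    crop_h, crop_w = crop_size
    top = rng.integers(0, out.shape[0] - crop_h + 1)
    left = rng.integers(0, out.shape[1] - crop_w + 1)
    out = out[top:top + crop_h, left:left + crop_w]  # random crop
    out = out + rng.uniform(-max_brightness_shift, max_brightness_shift)
    return np.clip(out, 0.0, 1.0)                    # keep values in range

rng = np.random.default_rng(0)
image = rng.uniform(0.0, 1.0, size=(32, 32, 3))      # stand-in for a real photo

# Eight different training samples derived from a single source image.
batch = np.stack([augment(image, rng) for _ in range(8)])
```

For classification, the label of the source image carries over to every augmented copy. For tasks such as detection or segmentation, the same flip and crop would also need to be applied to the boxes or masks.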

Computer vision’s next frontier and AI’s role as its primary catalyst

Computer vision is an immensely beneficial technology with widespread applications, spanning industries from retail to manufacturing, and beyond. However, it has its challenges. Factors such as varied lighting conditions, perspective and scale variability, occlusion, lack of contextual understanding, and the need for more annotated data have created obstacles in the journey toward fully efficient and reliable computer vision systems.

Researchers and engineers continually push the field’s boundaries in addressing these issues. Techniques such as histogram equalization, gamma correction, SIFT, SURF, RPCA, and the use of CNNs, GNNs, and semi-supervised and unsupervised learning techniques, along with data augmentation strategies, have all been instrumental in overcoming these challenges.

Continued investment in research, development, and training of the next generation of computer vision scientists is vital for the field’s evolution. As computer vision advances, it will play an increasingly important role in driving efficiency and innovation in many sectors of the economy and society. Despite the challenges faced, the future of computer vision technology remains promising, with immense potential to reshape our world.

Computer vision platforms of tomorrow

The most recent wave of generative AI technologies will prove instrumental in shaping the next iterations of computer vision solutions. Today’s computer vision platforms use AI to detect events, objects, and actions that neural networks have been trained to identify, but tomorrow’s platforms may use AI to speculate the outcome of events, objects’ state or positions, and the results of actions before they occur.

The true challenge of today’s AI-powered vision-based systems is their narrow understanding. For a model to “know” how to spot more objects, it must be familiar with those things. More knowledge means more training and heavier models.

Our society is on the precipice of general AI, which will provide always-on, hyper-intelligent digital assistants to tomorrow’s enterprises. Such assistants will not just know how to detect the things they were trained on; they will know how to learn and how to communicate what they see. Replicating human visual understanding has never been closer to reality than it is today.

How Chooch can help you

Chooch has radically improved computer vision with generative AI to deliver AI Vision. Chooch combines the power of computer vision with language understanding to deliver more innovative solutions for analyzing video and text data at scale. Whether in the cloud, on premise, or at the edge, Chooch is helping businesses deploy computer vision faster and shorten their time to value.

Learn more about Chooch’s generative AI technology, ImageChat™, which combines the power of computer vision with language understanding to deliver image-to-text capabilities. This remarkable advancement is simply a glimpse into what’s in store for the future. Try it yourself! Explore its potential and get the free app on Google Play and the App Store.

If you are interested in learning how Chooch AI Vision can help you, see how it works and request a demo today.