By Emrah Gultekin

As co-founder of Chooch and an entrepreneur constantly pursuing innovation and calculated risks, I’m in awe of the transformative potential that cutting-edge technologies like artificial intelligence hold. However, we can’t be blind to the risks that come with rapidly deploying such powerful capabilities at a massive scale before we fully understand their ramifications. Rather than stifling technological progress with heavy-handed regulation, we need a balanced approach—one that incentivizes the responsible development and implementation of AI, especially here in the Western world.

The Need for Responsible AI Practices

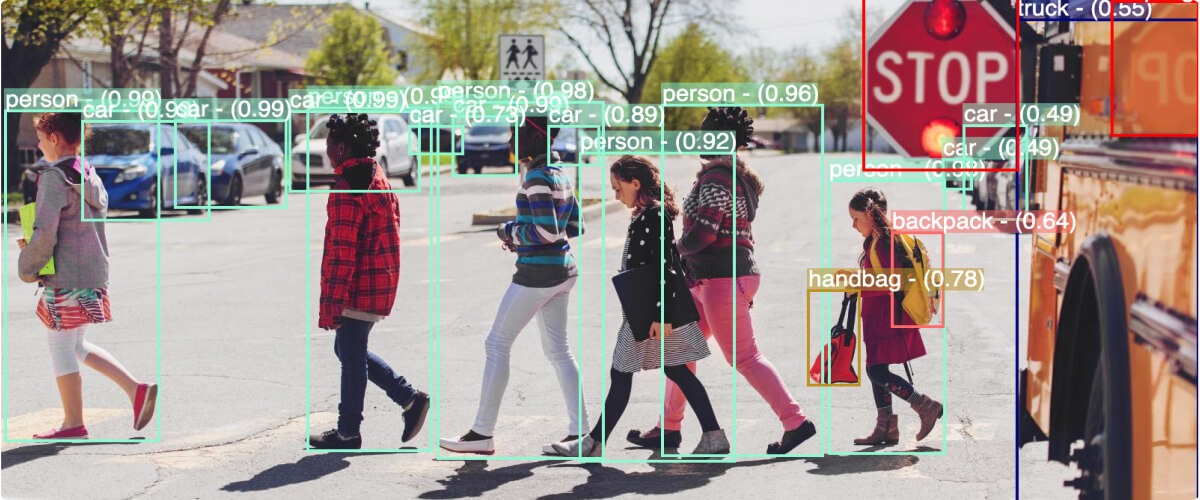

While government oversight has its place in managing potential dangers, I believe the private sector should take the lead in proactively establishing guardrails and best practices. We’re the ones closest to the technology and most invested in its success, and, frankly, we have the most to lose if we get this wrong. Responsible AI can’t just be an empty slogan; it requires a genuine commitment to ethics, transparency, and accountability from those of us building and deploying these systems.

My fellow entrepreneurs and I don’t just chase the next billion-dollar valuation; we want to create solutions that genuinely improve lives. That means rigorously testing to root out biases, implementing privacy-protecting techniques, and communicating clearly with the public whose trust we need to earn. Cutting corners for short-term gain is the antithesis of true innovation. Building robust, trustworthy AI is an opportunity to merge profitability with serving the greater good.

The West has been at the forefront of technological revolutions for centuries. As AI continues racing forward, we have a responsibility to steer it in an ethical direction before ceding its course to nations with different values. Collaboration between government and industry on progressive policies that reward doing the right thing can position Western nations as standard-bearers for AI governance on the global stage. The choice is ours—will we rise to that challenge?

Europe’s Productivity and Responsible AI Challenges

Many European countries face demographic challenges and productivity hurdles that point to a potentially difficult economic future. Implementing the AI Act in a way that hinders responsible AI innovation and deployment could further impede Europe’s productivity and progress, because AI offers the potential for efficiency and productivity gains akin to the transformative impact of computers. Promptly aligning incentives to foster responsible AI innovation is crucial for Europe’s future competitiveness.

Striking a Balance: Regulation and Responsible AI Innovation

While acknowledging the necessity of regulation to safeguard consumers, workers, and the environment, and to ensure ethical practices across industries, we must also recognize potential unintended consequences that could stifle responsible AI innovation and progress. Striking the right balance between regulation and fostering responsible AI innovation is of utmost importance.

The AI Act and Potential Pitfalls

The AI Act is a significant step towards ethical AI regulation, but we must carefully consider potential unintended consequences. Overly strict regulations may burden businesses with excessive compliance costs, create ambiguity, limit the scope of AI applications, and inadvertently introduce biases into AI systems—ultimately hindering responsible AI innovation.

Learning from History: Unintended Consequences of Regulation

History provides ample examples of well-intentioned regulations unintentionally hindering innovation, such as the UK’s Red Flag Act restricting early automobile development, and stringent FDA regulations limiting genetic testing innovation in the US. Additionally, the phenomenon of regulatory capture, where agencies become overly aligned with the industries they oversee, can favor established players and stifle competition for responsible AI solutions.

Fostering Responsible AI Innovation

While regulations play a vital role in safeguarding consumers and promoting ethical practices, they can inadvertently impede responsible AI innovation if not designed and implemented carefully. Policymakers and stakeholders must remain mindful of potential unintended consequences and promote competition, transparency, and fair market access for responsible AI solutions. Proactively monitoring and adapting regulations is crucial to minimize negative effects while fostering responsible AI innovation.

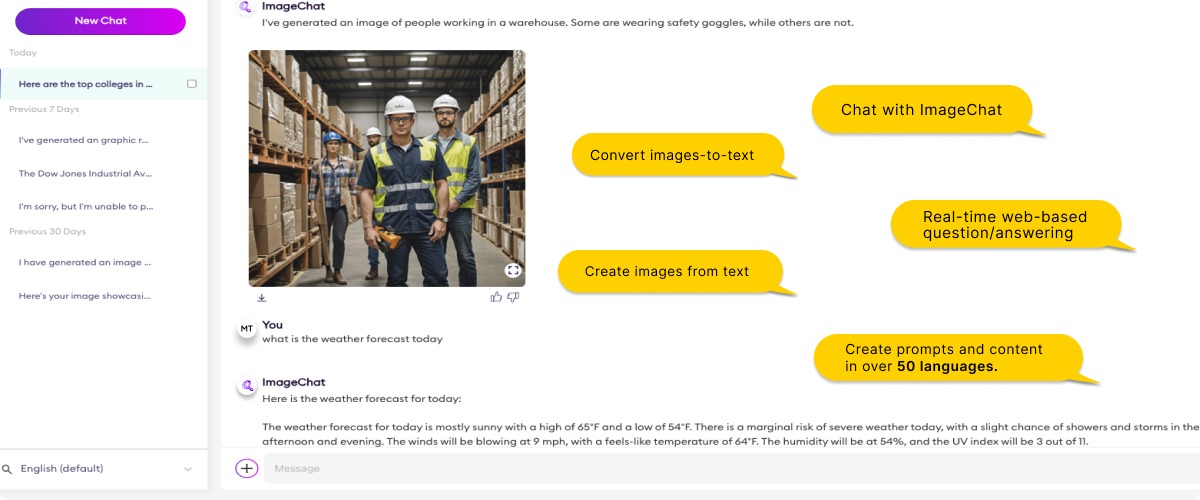

Chooch: Innovating for Responsible AI Solutions

Emrah Gultekin and Hakan Gultekin founded Chooch in 2015 with the goal of contributing to the next wave of responsible AI innovation. Their experiences highlighted the need for efficient, transparent solutions to help companies make informed decisions. Chooch aims to provide responsible AI tools that enable better decision-making. Learn more about Chooch and the Gultekin brothers in our blog post, “What is Chooch.”