5G and edge computing are closely intertwined technologies: each one enables the other. Edge computing depends on high bandwidth and low latency to transfer large quantities of data in near real time, which is exactly what 5G is good at providing. For its part, 5G needs applications such as edge computing to justify its rollout to wider coverage areas. 5G allows more and more computing to be done at the edge, where users and devices are physically located. Together, the rollout of edge computing and the 5G wireless network standard has created waves of excitement and speculation across the industry.

Even better news: 5G and edge computing can act as force multipliers for applications such as computer vision and AI. Edge devices, connected to the vast Internet of Things (IoT) via 5G, can collect, process, and analyze images and videos themselves, without having to send this data to the cloud for processing.

5G and Edge Computing for AI and Computer Vision

Mobile industry organization GSMA predicts that by 2025, 5G connections will account for 20% of connections worldwide and 4% in North America. 5G is predicted to deliver speeds up to 10 times faster than current 4G networks, so it’s no wonder that users are rushing to adopt it. Meanwhile, IT analyst firm Gartner projects that by 2022, half of enterprise data will be generated and processed at the edge, away from traditional data centers and cloud computing.

Computer vision at the edge, powered by 5G, opens up a wide range of possibilities. Together, edge computing and 5G let computer vision and AI models run close to where images are captured and share their results with low latency.

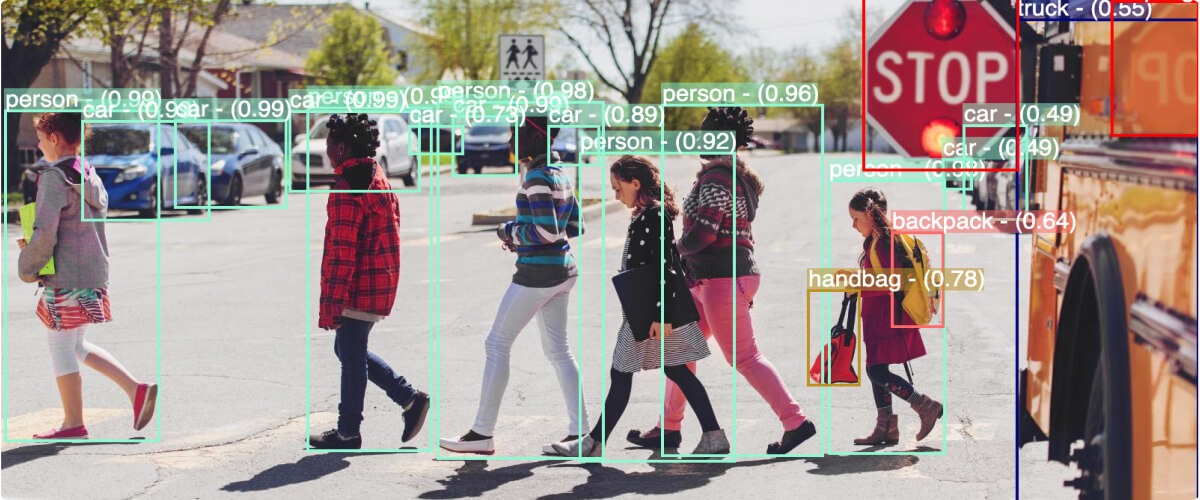

Here’s how the process typically works: an edge device captures visual data through a camera or other sensor, and then sends it to the device’s GPU (graphics processing unit). An AI model stored on the device then uses the GPU to rapidly analyze the contents of these images or videos, and can immediately send off the results using 5G technology.
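The flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `process_frame`, `fake_model`, and the `Detection` type are hypothetical names, the model is a stand-in that returns fixed results, and the "network" is just a list. It is not Chooch's or NVIDIA's API. The point is the shape of the pipeline: the frame stays on the device, and only a compact result is transmitted.

```python
import json
import time
from dataclasses import dataclass, asdict
from typing import Callable, List


@dataclass
class Detection:
    label: str
    confidence: float


def process_frame(
    frame: bytes,
    run_model: Callable[[bytes], List[Detection]],
    send: Callable[[bytes], None],
    min_confidence: float = 0.5,
) -> bytes:
    """Analyze one frame locally and transmit only a compact JSON result."""
    # Keep only detections the model is reasonably sure about.
    detections = [d for d in run_model(frame) if d.confidence >= min_confidence]
    payload = json.dumps(
        {"timestamp": time.time(), "detections": [asdict(d) for d in detections]}
    ).encode("utf-8")
    send(payload)  # in a real deployment this would go out over the 5G link
    return payload


# Stand-ins so the sketch runs without a camera, GPU, or network (hypothetical).
def fake_model(frame: bytes) -> List[Detection]:
    return [Detection("hard_hat", 0.93), Detection("vest", 0.41)]


sent: List[bytes] = []
fake_frame = bytes(640 * 480 * 3)  # one uncompressed 640x480 RGB frame
result = process_frame(fake_frame, fake_model, sent.append)
print(len(fake_frame), "bytes captured;", len(result), "bytes sent")
```

In this sketch the transmitted payload is a short JSON document rather than the raw frame, which is the main reason on-device analysis reduces the amount of data that has to leave the device.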

There are many different reasons you might prefer to run computer vision models on edge devices, rather than in the cloud:

- Since you don’t own the servers yourself, running AI models in the cloud is a recurring expense. Edge devices, by contrast, are a single, relatively inexpensive capital investment: you own the hardware and can operate it as you please.

- As data volumes continue to rise, processing data locally and independently reduces how much has to be transmitted and stored centrally, which helps contain data bloat.

- Use cases such as self-driving cars depend on near-instantaneous, highly accurate insights, and can’t afford the latency of exchanging data with the cloud.
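The cost argument in the first bullet comes down to simple break-even arithmetic. The sketch below is a rough illustration only: the function name `break_even_months` and all of the dollar figures are hypothetical, and real comparisons would also need to account for maintenance, power, and hardware refresh cycles.

```python
import math
from typing import Optional


def break_even_months(
    edge_upfront: float, edge_monthly: float, cloud_monthly: float
) -> Optional[int]:
    """Return the first whole month in which cumulative edge cost is no
    higher than cumulative cloud cost, or None if that never happens."""
    monthly_saving = cloud_monthly - edge_monthly
    if monthly_saving <= 0:
        return None  # the edge device never pays for itself
    return math.ceil(edge_upfront / monthly_saving)


# Hypothetical figures: a 1200 device costing 20/month to run,
# compared with 120/month of cloud inference.
months = break_even_months(edge_upfront=1200.0, edge_monthly=20.0, cloud_monthly=120.0)
print(months)  # 12
```

With these made-up numbers, both options have cost 1440 after 12 months, and the edge device is cheaper from then on.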

Below are a few more computer vision use cases in which high speed and high accuracy are of the essence:

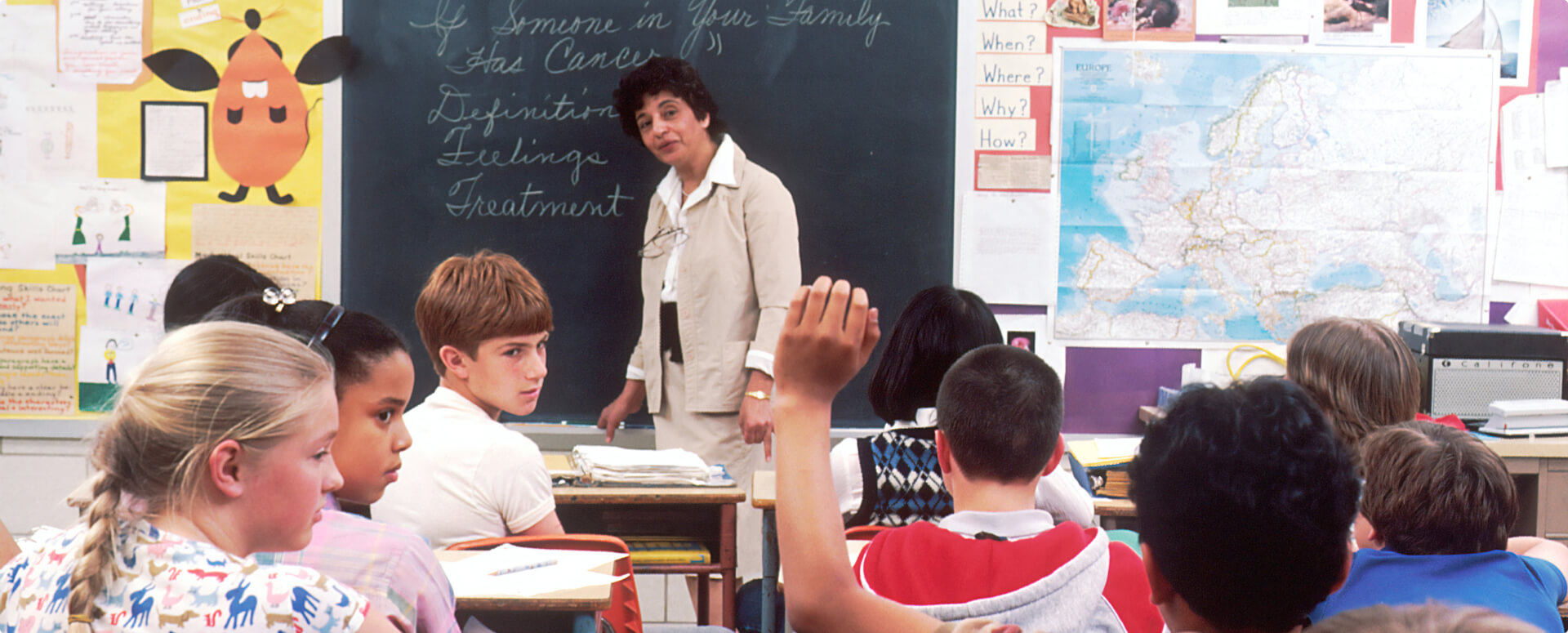

- Image recognition models can identify the objects and people in a given image, accurately choosing from hundreds or thousands of categories. For example, edge devices can examine surveillance videos at a construction site to ensure that all workers are wearing PPE (personal protective equipment).

- Facial authentication models can determine whether a given individual is authorized to access a restricted area, with very high accuracy, in just a fraction of a second.

- Event detection models analyze streams of visual data over time to detect a given event, such as smoke, fire, or a person falling.

In all of these use cases and more, edge computing and 5G can help boost speeds and lower latency, delivering immediate value for local users.
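To make the event detection case more concrete, here is a minimal sketch of one common way to turn per-frame model scores into events: a sliding window that reports an event only when enough recent frames agree. This is an assumption for illustration, not a description of how any particular product works. The function name `detect_events` and the `threshold`, `window`, and `min_hits` parameters are hypothetical.

```python
from collections import deque
from typing import Iterable, Iterator


def detect_events(
    scores: Iterable[float],
    threshold: float = 0.6,
    window: int = 5,
    min_hits: int = 3,
) -> Iterator[int]:
    """Yield the frame index at which each event is first reported.

    An event is reported when at least `min_hits` of the last `window`
    frames score at or above `threshold`. It is reported once, and can be
    reported again only after the condition has cleared.
    """
    recent = deque(maxlen=window)  # True/False flags for the latest frames
    active = False
    for index, score in enumerate(scores):
        recent.append(score >= threshold)
        triggered = sum(recent) >= min_hits
        if triggered and not active:
            yield index
        active = triggered


# Hypothetical per-frame "smoke" scores from an on-device model.
frame_scores = [0.1, 0.7, 0.2, 0.8, 0.9, 0.1, 0.1, 0.1, 0.1, 0.1, 0.9, 0.9, 0.9]
events = list(detect_events(frame_scores))
print(events)  # [4, 12]
```

Requiring several hits within the window trades a few frames of delay for fewer false alarms from a single noisy frame. The right settings depend on the frame rate and on how costly a missed or spurious alert is.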

Case Study: Wind River 5G Edge AI

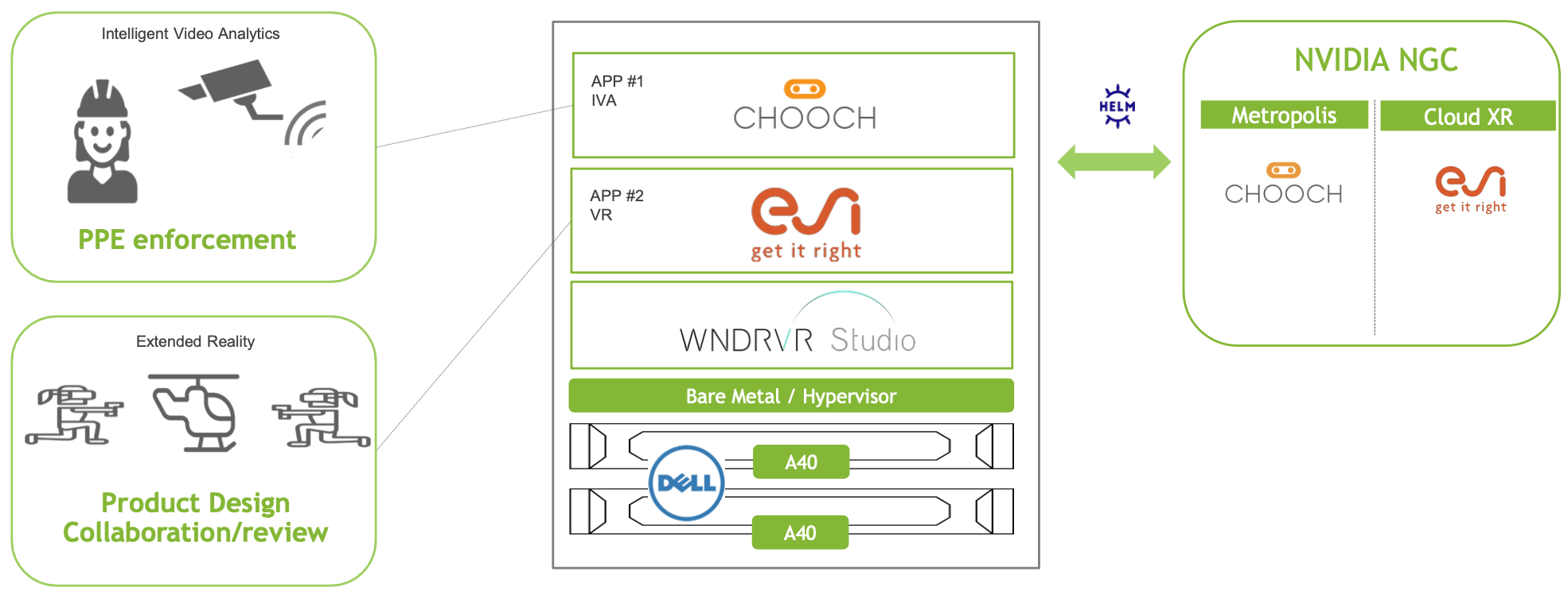

Wind River is a leading technology firm that builds software for embedded systems and edge computing. The company recently partnered with NVIDIA—which builds powerful, compact edge devices like the NVIDIA Jetson—to create a converged technology stack with multiple edge functions, including 5G, intelligent video analytics, and augmented and virtual reality.

The company’s demo, which was showcased at NVIDIA’s GPU Technology Conference (GTC) 2021, was built together with Chooch and other technology partners. Chooch provided multiple containerized solutions for intelligent video analytics and computer vision, with use cases ranging from safety and surveillance to healthcare and manufacturing. Powered by NVIDIA’s GPUs, Wind River and Chooch were able to demonstrate the tremendous potential that edge computing, now enabled by 5G, can have for users in all industries.

Want to learn more? Check out this short presentation from Wind River’s Gil Hellmann at NVIDIA’s GTC 2021. Contact us to learn more about Chooch Vision AI solutions, and how they may benefit your business.