Learn more about computer vision and AI with this short list of top-level computer vision and AI terminology. Don’t know what AI training is? An AI model? Image recognition? What is an edge device? Find out from these quick computer vision definitions.

- Action recognition: A subfield of computer vision that seeks to identify when a person is performing an action, such as running, sleeping, or falling.

- AI API: An application programming interface (API) for users to gain access to artificial intelligence tools and functionality. By offering third-party AI services, AI APIs save developers from having to build their own AI in-house.

- AI computer: Any computer that can perform computations for artificial intelligence and machine learning, i.e., performing AI training and running AI models. Thanks to recent technological advances, even consumer-grade hardware can now serve as an AI computer when equipped with a sufficiently powerful CPU and GPU.

- AI demo: A demonstration of the features and capabilities of an AI platform, or of artificial intelligence in general.

- AI model: The result of training an AI algorithm, given the input data and settings (known as “hyperparameters”). An AI model is a distilled representation that attempts to encapsulate everything that the AI algorithm has learned during the training process. AI models can be shared and reused on new data for use in real-world environments.

- AI platform: A software library or framework for users to build, deploy, and manage applications that leverage artificial intelligence. AI platforms are less static and more extensive than AI APIs: whereas AI APIs return the results of a third-party pre-trained model, AI platforms allow users to create their own AI models for different purposes.

- AI training: The process of training one or more AI models. During the training process, AI models “learn” over time by looking at more and more input data. After making a prediction about a given input, the AI model discovers whether its prediction was correct; if it was incorrect, it adjusts its parameters to account for the error.

- Algorithm: A well-defined, step-by-step procedure that can be implemented by a computer. Algorithms must eventually terminate and are used to perform a particular task or provide the answer to a certain problem.
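
To make the predict, check, and adjust cycle described under AI training more concrete, here is a minimal sketch using a perceptron, one of the simplest trainable models. The function names, the toy logical-AND dataset, and the learning rate are assumptions chosen for illustration; real computer vision models are far larger, but the loop has the same shape.

```python
def predict(weights, bias, features):
    """Return 1 if the weighted sum of the inputs is positive, else 0."""
    activation = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, labels, epochs=20, learning_rate=1.0):
    """Train a tiny linear classifier and return its (weights, bias)."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in zip(samples, labels):
            # 1. Make a prediction about the input.
            prediction = predict(weights, bias, features)
            # 2. Check it against the correct answer (0 means correct).
            error = label - prediction
            # 3. If the prediction was wrong, adjust the parameters.
            if error != 0:
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, features)]
                bias += learning_rate * error
    return weights, bias


# Toy training data (an assumption for illustration): logical AND.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]

weights, bias = train_perceptron(samples, labels)
predictions = [predict(weights, bias, s) for s in samples]
print(predictions)  # [0, 0, 0, 1]
```

The returned weights and bias play the role of the AI model: they are the distilled result of training and can be reused on new inputs without training again.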

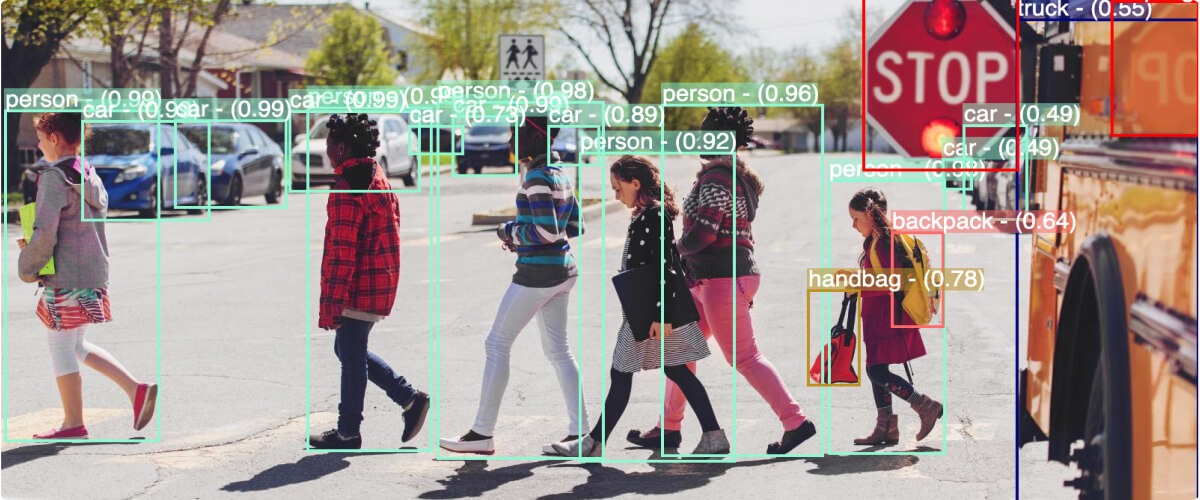

- Annotation: The process of labeling the input data in preparation for AI training. In computer vision, the input images and video must be annotated according to the task you want the AI model to perform. For example, if you want the model to perform image segmentation, the annotations must include the location and shape of each object in the image.

- Anomaly detection: A subfield of AI, machine learning, and data analysis that seeks to identify anomalies or outliers in a given dataset. Anomaly detection is applicable across a wide range of industries and use cases: for example, it can help discover instances of bank fraud or defects in manufacturing equipment.

- Artificial general intelligence (AGI): A type of artificial intelligence that can accomplish a wide variety of tasks as well as, or even better than, human beings. So far, attempts to build an AGI have been unsuccessful.

- Artificial intelligence (AI): A field of computer science that seeks to bring intelligence to machines, usually by simulating human thought and action. AI enables computers to learn from experience and adjust to unseen inputs.

- Artificial Intelligence of Things (AIoT): The intersection of artificial intelligence with the Internet of Things: a vast, interconnected network of devices and sensors that communicate and exchange information via the Internet. Data collected by IoT devices is then processed by AI models. Common AIoT use cases include wearable technology and smart home devices.

- Artificial narrow intelligence (ANI): A type of artificial intelligence that, in contrast with an AGI, is designed to focus on a singular or limited task (e.g., playing chess or classifying photos of dog breeds).

- Artificial neural network (ANN): Also called simply a “neural network,” an ANN is a machine learning model that consists of many interconnected artificial “neurons.” These neurons exchange information, roughly simulating the human brain. ANNs are the foundation of deep learning, a subfield of machine learning.

- Backpropagation: The main technique by which ANNs learn. In backpropagation, the weights of the connections between neurons are modified via gradient descent so that the network will give an output closer to the expected result.

- Bayesian network: A probabilistic model in the form of a graph that defines the conditional probability of different events (e.g., the probability of event A happening, given that event B does or does not happen).

- Big data: The use of datasets that are too large and/or complex to be analyzed by humans or traditional data processing methods. Big data may present challenges in terms of velocity (i.e., the speed at which it arrives) or veracity (i.e. maintaining high data quality).

- Chatbot: A computer program that uses natural language processing methods to conduct realistic conversations with human beings. Chatbots are frequently used in fields such as customer support (e.g., answering simple questions or processing item returns).

- Computer vision: A subfield of computer science, artificial intelligence, and machine learning that seeks to give computers a rapid, high-level understanding of images and videos, “seeing” them in the same way that human beings do. In recent years, computer vision has made great strides in accuracy and speed, thanks to deep learning and neural networks.

- Computer vision platform: An IT solution for building and deploying computer vision applications, bundling together a software development environment with a set of associated computer vision resources.

- Computer vision solution: A tool or platform that helps users integrate computer vision into their workflows, even without in-depth knowledge of computer vision or AI. Thanks to the wide range of applications for computer vision, from healthcare and retail to manufacturing and security, businesses of all sizes and industries are increasingly adopting computer vision solutions.

- Convolutional neural network (CNN): A special type of neural network that uses a mathematical operation known as a “convolution” to combine inputs (e.g., nearby pixels in an image). CNNs excel at higher-dimensional input such as images and videos.
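
As a rough illustration of how a convolution combines nearby pixels, the sketch below slides a small kernel over a grayscale image stored as a list of lists. The function name `convolve2d`, the toy image, and the kernel values are assumptions made for this example. Like most deep learning libraries, it does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    feature_map = []
    for row in range(out_h):
        out_row = []
        for col in range(out_w):
            # Combine the pixels under the kernel into a single number.
            total = 0
            for i in range(kernel_h):
                for j in range(kernel_w):
                    total += image[row + i][col + j] * kernel[i][j]
            out_row.append(total)
        feature_map.append(out_row)
    return feature_map


# Toy 4x4 image (an assumption): dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked kernel that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The output is largest in the middle column, exactly where the image changes from dark to bright. In a CNN the kernel values are not hand-picked as they are here; they are learned during training.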

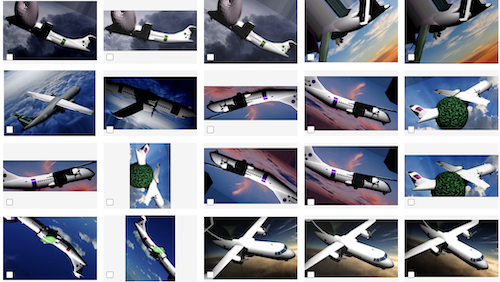

- Data augmentation: A technique to increase the size of your datasets by making slight modifications to the existing images in the dataset. For example, you can rotate, flip, scale, crop, or shift an image in multiple ways to create dozens of augmented images. Incorporating augmented data can help the model learn to generalize better instead of overfitting to recognize the images themselves.

- Data collection: The process of accumulating large quantities of information for use in training an AI model. Data can be collected from proprietary sources (e.g. your own videos) or from publicly available datasets, such as the ImageNet database. Once collected, data must be annotated or tagged for use in AI training.

- Data mining: The use of automated techniques to uncover hidden patterns and insights in a dataset and make smarter data-driven predictions and forecasts. Data mining is widely used in fields such as marketing, finance, retail, and science.

- Deep learning: A subfield of artificial intelligence and machine learning that uses neural networks with multiple “hidden” (deep) layers. Thanks to both algorithmic improvements and technological advancements, recent years have seen deep learning successfully used to train AI models that can perform many advanced human-like tasks—from recognizing speech to identifying the contents of an image.

- Defect detection: A subfield of computer vision that seeks to identify defects, errors, anomalies, and issues with products or machinery.

- Dense classification: A method for training deep neural networks from only a few examples, first proposed in the 2019 academic paper “Dense Classification and Implanting for Few-Shot Learning” by Lifchitz et al. Broadly, dense classification encourages the network to look at all aspects of the object it seeks to identify, rather than focusing on only a few details.

- Digital ecosystem: An interconnected collection of IT resources (such as software applications, platforms, and hardware) owned by a given organization, acting as a unit to help the business accomplish its goals.

- Edge AI: The use of AI and machine learning algorithms running on edge devices to process data on local hardware, rather than uploading it to the cloud. Perhaps the greatest benefit of Edge AI is speed: because data does not have to travel back and forth to the cloud, decisions can be made in real time.

- Edge device: An Internet-connected hardware device that is part of the Internet of Things (IoT) and acts as a gateway in the IoT network: on one hand, the local sensors and devices that collect data; on the other, the full capability of IoT in the cloud. For fastest results, many edge devices are capable of performing computations locally, rather than offloading this responsibility to the cloud.

- Edge platform: An IT software development environment that simplifies the process of deploying and maintaining edge devices.

- Ensemble learning: The use of predictions from multiple AI models trained on the same input (or samples of the same input) to reduce error and increase accuracy. Due to natural variability during the training phase, different models may return different results given the same data. Ensemble learning combines the predictions of all these models (e.g. by taking a majority vote) with the goal of improving performance.

- Event detection: A subfield of computer vision that analyzes visual data (i.e. images or videos) in order to detect when an event has occurred. Event detection has been applied successfully to use cases such as fall detection and smoke and fire detection.

- Facial authentication: A subfield of facial recognition that seeks to verify a person’s identity, usually for security purposes. Facial authentication is often performed on edge devices that are powerful enough to identify a subject almost instantaneously and with a high degree of accuracy.

- Facial recognition: The use of human faces as a biometric characteristic by examining various facial features (e.g. the distance and location of the eyes, nose, mouth, and cheekbones). Facial recognition is used both for facial authentication (identifying individual people with their consent) as well as in video surveillance systems that capture people’s images in public.

- Generative adversarial network (GAN): A type of neural network that attempts to learn through competition. One network, the “generator,” attempts to create realistic imitations of the training data (e.g., photos of human faces). The other network, the “discriminator,” attempts to separate the real examples from the fake ones.

- Genetic algorithm: A class of algorithms that takes inspiration from the evolutionary phenomenon of natural selection. Genetic algorithms start with a “pool” of possible solutions that evolve and mutate over time until reaching a stopping point.

- GPU: Short for “graphics processing unit,” a specialized hardware device used in computers, smartphones, and embedded systems originally built for real-time computer graphics rendering. However, the ability of GPUs to efficiently process many inputs in parallel has made them useful for a wide range of applications—including training AI models.

- Hash: The result of a mathematical function known as a “hash function” that converts arbitrary data into a fixed-size numerical output that is unique (or nearly unique) to that data. In facial authentication, for example, a complex hash function encodes the identifying characteristics of a user’s face and returns a numerical result. When a user attempts to access the system, their face is rehashed and compared with existing hashes to verify their identity.

- Image enrichment: The use of AI and machine learning to perform automatic “enrichment” of visual data, such as images and videos, by adding metadata (e.g. an image’s author, date of creation, or contents). In the media industry, for example, image enrichment is used to quickly and accurately tag online retail listings or news agency photos.

- Image quality control: The use of AI and machine learning to perform automatic quality control on visual data, such as images and videos. For example, image quality control tools can detect image defects such as blurriness, nudity, deepfakes, and banned content, and correct the issue or delete the image from the dataset.

- Image recognition: A subfield of AI and computer vision that seeks to recognize the contents of an image by describing them at a high level. For example, a trained image recognition model might be able to distinguish between images of dogs and images of cats. Image recognition is contrasted with image segmentation, which seeks to divide an image into multiple parts (e.g. the background and different objects).

- Image segmentation: A subfield of computer vision that seeks to divide an image into contiguous parts by associating each pixel with a certain category, such as the background or a foreground object.

- Industrial Internet of Things (IIoT): The use of Internet of Things (IoT) devices in industrial and manufacturing contexts. IIoT devices can be used to inspect industrial processes, detect flaws and defects in products and manufacturing equipment, promote workplace safety by detecting the use of personal protective equipment (PPE), and much more.

- Inference: The use of a trained machine learning model to make predictions about a previously unseen dataset. In other words, the model infers the dataset’s contents using what it has learned from the training set.

- Internet of Things (IoT): A vast, interconnected network of devices and sensors that communicate and exchange information via the Internet. As one of the fastest-growing tech trends (with an estimated 127 new devices being connected every second), the IoT has the potential to transform industries such as manufacturing, energy, transportation, and more.

- JSON response: A response to an API request that uses the popular and lightweight JSON (JavaScript Object Notation) data format. A JSON response typically consists of a top-level object or array; an object contains one or more key-value pairs (e.g. { "name": "John Smith", "age": 30 }).

- Labeling: The process of assigning a label that provides the correct context for each input in the training dataset, or the “answer” that you would like the AI model to return during training. In computer vision, there are two types of labeling: annotation and tagging. Labeling can be performed in-house or through outsourcing or crowdsourcing services.

- Liveness detection: A security feature for facial authentication systems to verify that a given image or video represents a live, authentic person, and not an attempt to fraudulently bypass the system (e.g. by wearing a mask of a person’s likeness, or by displaying a sleeping person’s face). Liveness detection is essential to guard against malicious actors.

- Machine learning: A subfield of AI and computer science that studies algorithms that can improve themselves over time by gaining more experience or viewing more data. Machine learning includes both supervised learning (in which the algorithm is given the expected results or labels) and unsupervised learning (in which the algorithm must find patterns in unlabeled data).

- Machine translation: The use of computers to automatically translate text from one natural (human) language to another, without assistance from a human translator.

- Machine vision: A subfield of AI and computer vision that combines hardware and software to enable machines to “see” at a high level as humans can. Machine vision is distinct from computer vision: a machine vision system consists of both a mechanical “body” that captures images and videos, as well as computer vision software that interprets these inputs.

- Metadata: Data that describes and provides information about other data. For visual data such as images and videos, metadata consists of three categories: technical (e.g. the camera type and settings), descriptive (e.g. the author, date of creation, title, contents, and keywords), and administrative (e.g. contact information and copyright).

- Motion tracking: A subfield of computer vision with the goal of following the motion of a person or object across multiple frames in a video.

- Natural language processing (NLP): A subfield of computer science and artificial intelligence with the goal of making computers understand, interpret, and generate human languages such as English.

- Near-edge AI: The deployment of AI systems on the “near edge,” i.e., computing infrastructure located between the point of data collection and remote servers in the cloud.

- Neural network: An AI and machine learning algorithm that seeks to mimic the high-level structure of a human brain. Neural networks have many interconnected artificial “neurons” arranged in multiple layers, each one storing a signal that it can transmit to other neurons. The use of larger neural networks with many hidden layers is known as deep learning.

- No-code AI: The use of a no-code platform to generate AI models without the need to write lines of computer code (or be familiar with computer programming at all).

- Object recognition: A subfield of computer vision, artificial intelligence, and machine learning that seeks to recognize and identify the most prominent objects (i.e., people or things) in a digital image or video.

- Optical character recognition (OCR): A technology that recognizes handwritten or printed text and converts it into digital characters.

- Overfitting: A performance issue with machine learning models in which the model learns to fit the training data too closely, including excessive detail and noise. This causes the model to perform poorly on unseen test data. Because overfitting is often caused by a lack of training data, techniques such as data augmentation and synthetic data generation can help alleviate it.

- Pattern recognition: The use of machine learning methods to automatically identify patterns (and anomalies) in a set of input data.

- Pre-trained model: An AI model that has already been trained on a set of input training data. Given an input, a pre-trained model can rapidly return its prediction on that input, without needing to train the model again. Pre-trained models can also be used for transfer learning, i.e. applying knowledge to a different but similar problem (for example, from recognizing car manufacturers to truck manufacturers).

- Presentation attack: An attempt to thwart biometric systems by spoofing the characteristics of a different person. With facial recognition software, for example, presentation attacks may consist of printed photographs or 3D face masks presented to the camera by the attacker. Techniques such as liveness detection are necessary to avoid presentation attacks.

- Recurrent neural network (RNN): A special type of neural network that uses the output of the previous step as the input to the current step. RNNs are best suited for sequential and time-based data such as text and speech.

- Reinforcement learning: A subfield of AI and machine learning that teaches an AI model, using trial and error, how to behave in a complex environment in order to maximize its reward.

- Robotic process automation (RPA): A subfield of business process automation that uses software “robots” to automate manual repetitive tasks.

- Robotics: An interdisciplinary field combining engineering and computer science that seeks to build intelligent machines known as “robots,” which have bodies and can take actions in the physical world.

- Segmentation: A subfield of AI and computer vision that seeks to divide an image or video into multiple parts (e.g. the background and different objects). For example, an image of a crowd of people might be segmented into the outlines of each individual person, as well as the image’s background. Image segmentation is widely used for applications such as healthcare (e.g. identifying cancerous cells in a medical image).

- Sentiment detection: A subfield of AI and natural language processing that seeks to understand the tone of a given text. This may include determining whether a text has a positive, negative, or neutral opinion, or whether it contains a certain emotional state (e.g. “sad,” “angry,” or “happy”).

- Strong AI: A synonym for artificial general intelligence (AGI). “Strong AI” refers to a theoretical AI model that could duplicate or even surpass human capability across a wide spectrum of activities, serving as a machine “brain.”

- Structured data: Data that adheres to a known, predefined schema, making it easier to query and analyze. Examples of structured data include student records (with fields such as name, class year, GPA, etc.), and daily stock prices.

- Supervised learning: A subfield of machine learning that uses both input data and the expected output labels during the training process. In this way, the computer can easily identify and correct its mistakes.

- Synthetic data: Realistic but computer-generated image data that can be used to increase the size of your datasets during AI training. Using a 3D model and its associated texture, synthetic data (and the corresponding annotations or bounding boxes) can be generated with a wide variety of poses, viewpoints, backgrounds, and lighting conditions.

- Tagging: The process of labeling the input data with a single tag in preparation for AI training. Tagging is similar to annotation, but uses only a single label for each piece of input data. For example, if you want to perform image recognition for different dog breeds, your tags may be “golden retriever,” “bulldog,” etc.

- Transfer learning: A machine learning technique that reuses a model trained for one problem on a different but related problem, shortening the training process. For example, transfer learning could apply a model trained to recognize car makes and models to identify trucks instead.

- Turing test: A metric proposed by Alan Turing for assessing a machine’s “intelligence” by testing whether it can convince a human questioner that it is a person and not a computer.

- Unstructured data: Data that does not adhere to a predefined schema, making it more flexible but harder to analyze. Examples of unstructured data include text, images, and videos.

- Unsupervised learning: A subfield of machine learning that provides only input data, but not the expected output, during the training process. This requires the computer to identify hidden patterns and construct its own model of the data.

- Video analytics: The use of AI and computer vision to automatically analyze the contents of a video. This may include facial recognition, motion detection, and/or object detection. Video analytics is widely used in industries such as security, construction, retail, and healthcare, for applications from loss prevention to health and safety.

- Visual AI: The use of artificial intelligence to interpret visual data (i.e. images and videos), roughly synonymous with computer vision.

- Weak AI: A synonym for artificial narrow intelligence (ANI). “Weak AI” refers to an AI model that focuses on equaling or surpassing human performance on a particular task or set of tasks, with an intentionally limited scope.
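
The ensemble learning entry in this glossary mentions combining model predictions by majority vote. The sketch below shows one simple way that could look. The three "models" are hypothetical stand-ins (plain functions that threshold an image's average brightness), and the names `majority_vote` and `ensemble_predict` are assumptions for this example rather than any library's API.

```python
from collections import Counter


def majority_vote(predictions):
    """Return the label that appears most often in `predictions`."""
    return Counter(predictions).most_common(1)[0][0]


def ensemble_predict(models, model_input):
    """Ask every model for a prediction, then take the majority vote."""
    return majority_vote([model(model_input) for model in models])


# Hypothetical models: each labels a scene from its average brightness
# (0.0 to 1.0) but uses a slightly different decision threshold, standing
# in for the natural variability between separately trained models.
def model_a(brightness):
    return "day" if brightness > 0.4 else "night"


def model_b(brightness):
    return "day" if brightness > 0.5 else "night"


def model_c(brightness):
    return "day" if brightness > 0.6 else "night"


models = [model_a, model_b, model_c]

print(ensemble_predict(models, 0.55))  # day   (two of three models say "day")
print(ensemble_predict(models, 0.45))  # night (two of three models say "night")
```

Using an odd number of models avoids ties in a two-class problem such as this one.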

Want to learn more about computer vision services from Chooch AI? Contact us for computer vision consulting.